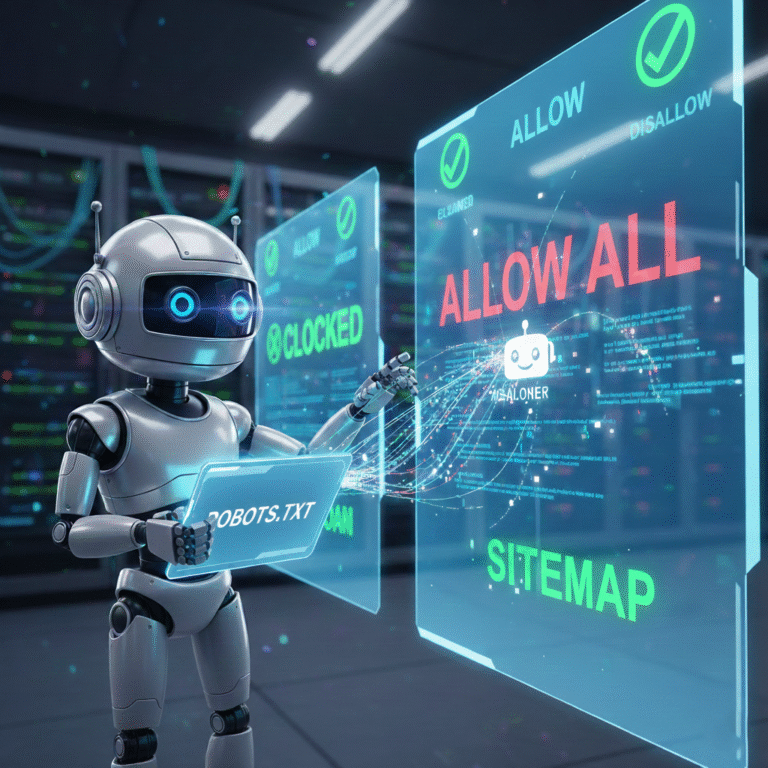

A robots.txt file is a plain text file that lives in your website’s root directory and tells search engine crawlers which pages they may or may not crawl. Think of it as a set of instructions for web robots like Googlebot and Bingbot. The file follows the Robots Exclusion Protocol, which lets you manage crawler permissions and control how search engines interact with your site.

Every website benefits from having a robots.txt file because it:

- Controls crawler access to specific pages

- Reduces crawling of duplicate content

- Keeps crawlers away from private areas such as admin pages (it does not secure them, because the file itself is public)

- Optimizes crawl budget for large websites

- Improves overall site crawling efficiency

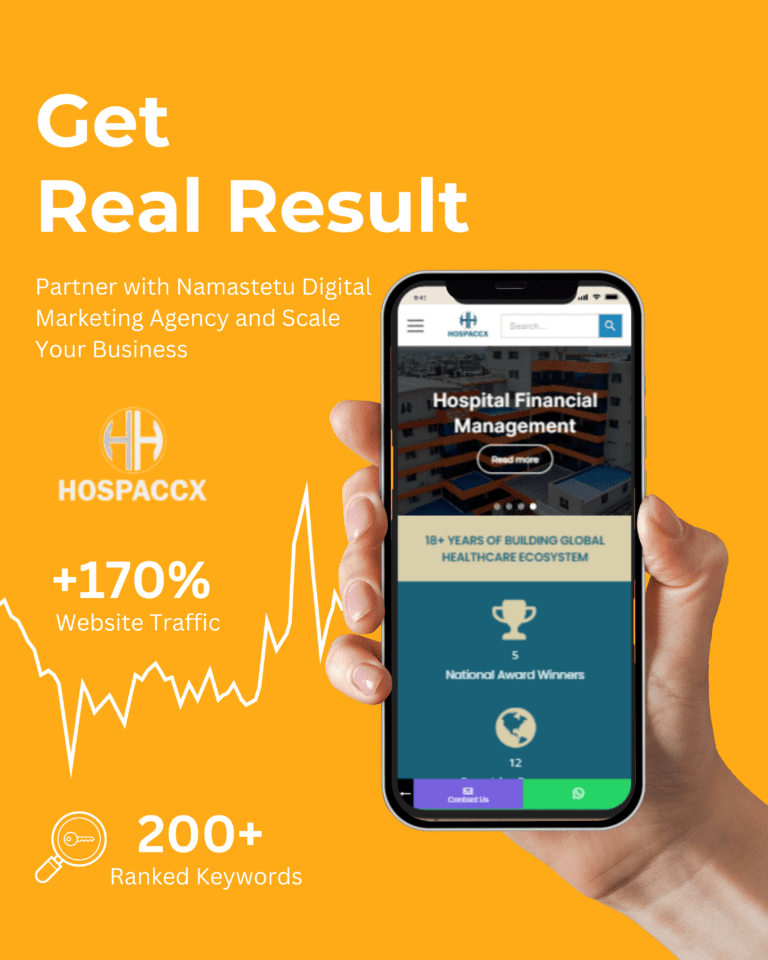

At Namastetu Technologies, our SEO company in Indore helps businesses implement proper robots.txt configuration as part of comprehensive SEO services in Indore. This ensures search engines focus on your most valuable content.

How Search Engines Use Robots.txt to Crawl Your Website

Search engine crawlers such as Googlebot and Bingbot check your robots.txt file before crawling pages on your website. When they visit your site, the first thing they do is request yoursite.com/robots.txt, and the rules they find there determine which URLs they may crawl.

The crawling mechanism works like this:

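- Well-behaved crawlers request /robots.txt before crawling other URLs on the site

- Each crawler looks for the group of rules whose User-agent line matches its name, falling back to the * group

- It checks every URL against that group’s Allow and Disallow rules before requesting it

- Some crawlers, such as Bingbot, also honor a Crawl-delay directive; Googlebot ignores it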
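The workflow above can be sketched with Python’s standard-library urllib.robotparser module. This is a minimal illustration, not how any search engine is actually implemented: the robots.txt content, the example.com URLs, and the plan_crawl helper are hypothetical, and the parser receives the file as text instead of fetching it over the network.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: one group for Bingbot, one for every other crawler.
ROBOTS_TXT = """\
User-agent: Bingbot
Crawl-delay: 5
Disallow: /search/

User-agent: *
Disallow: /admin/
"""

# Hypothetical URLs a crawler has discovered on the site.
URLS = [
    "https://www.example.com/products/",
    "https://www.example.com/search/shoes",
    "https://www.example.com/admin/login",
]


def plan_crawl(robots_txt, user_agent, urls):
    """Return the URLs this user agent may fetch and its Crawl-delay (or None)."""
    parser = RobotFileParser()
    # A real crawler would download /robots.txt first; here we parse text directly.
    parser.parse(robots_txt.splitlines())
    allowed = [url for url in urls if parser.can_fetch(user_agent, url)]
    return allowed, parser.crawl_delay(user_agent)


for bot in ("Bingbot", "Googlebot"):
    allowed, delay = plan_crawl(ROBOTS_TXT, bot, URLS)
    print(bot, allowed, "crawl-delay:", delay)
```

Bingbot follows only its own group, so /admin/ stays open to it: a crawler uses the most specific group that matches its name rather than merging groups. Googlebot falls back to the * group and gets no crawl delay.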

A digital marketing agency in Indore understands that proper robots.txt implementation affects how search engines allocate their crawl budget. When working with an SEO agency in Indore, you’ll learn that controlling how search engines crawl your site through robots.txt is an essential part of technical SEO.

What Does Robots.txt Do for Your Website SEO

Robots.txt plays an important role in crawl optimization. It doesn’t directly boost rankings, but it helps search engines crawl your site more efficiently, which indirectly supports your search visibility. With a well-configured file, Googlebot spends more of its crawl budget on your important pages instead of wasting it on duplicate or low-value URLs.

Key SEO benefits include:

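- Crawl budget: Saves crawler resources for high-priority pages

- Crawl focus: Keeps bots away from low-value pages such as internal search results

- Clearer site architecture: Signals which sections of your site matter

- Indexing support: Works alongside noindex tags and canonical URLs, which control what actually appears in search results (a page blocked by robots.txt can still be indexed if other sites link to it)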

Our website development agency in Indore at Namastetu Technologies integrates robots.txt setup during the development phase, ensuring your SEO crawling strategy is optimized from day one. This proactive approach to SEO crawl management helps businesses achieve better search performance and organic visibility.

Why Robots.txt Matters for Your Website’s Search Visibility

Your website’s search visibility depends on managing crawler access effectively. Without a proper robots.txt configuration, crawlers may spend their time on low-quality pages while important content gets crawled less often. This affects your organic search presence and how your site appears in search results.

Search discoverability improves when you:

- Control which pages search engines can crawl

- Keep crawlers out of admin pages

- Direct crawlers toward your most valuable content

- Decide strategically which sections of your site to expose to search

When you partner with a digital marketing agency like Namastetu Technologies, you gain access to expert SEO services in Indore from a team that understands how robots.txt affects your search presence. Our app development agency in Indore also ensures that mobile apps with web components have the right indexing settings.

What is a Robots.txt File and How It Controls Crawlers

A robots.txt file is a plain text file, encoded in UTF-8 (which includes plain ASCII), that contains directives for crawlers. Rules are organized into groups: each group starts with one or more User-agent lines naming the crawlers it targets, followed by Disallow and Allow lines listing the paths those crawlers may not or may crawl.

Basic robots.txt structure:

```
User-agent: *
Disallow: /admin/
Allow: /public/
```
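The grouping idea behind this structure (User-agent lines followed by the rules that apply to them) can be sketched as a small parser. parse_groups is a hypothetical helper written for illustration, not a library function; it handles comments, case-insensitive field names, and several User-agent lines sharing one group, and it ignores other lines such as Sitemap.

```python
from __future__ import annotations

from collections import defaultdict


def parse_groups(robots_txt: str) -> dict[str, list[tuple[str, str]]]:
    """Map each user agent (lowercased) to its (directive, path) rules."""
    groups: dict[str, list[tuple[str, str]]] = defaultdict(list)
    current_agents: list[str] = []
    last_was_agent = False

    for raw_line in robots_txt.splitlines():
        line = raw_line.split("#", 1)[0].strip()  # drop comments and whitespace
        if ":" not in line:
            continue  # blank or malformed line
        field, value = (part.strip() for part in line.split(":", 1))
        field = field.lower()

        if field == "user-agent":
            # Start a new group unless the previous line was also a User-agent line.
            if not last_was_agent:
                current_agents = []
            current_agents.append(value.lower())
            last_was_agent = True
        elif field in ("allow", "disallow"):
            for agent in current_agents:
                groups[agent].append((field, value))
            last_was_agent = False
        else:
            last_was_agent = False  # e.g. Sitemap: not attached to a group here

    return dict(groups)


sample = """User-agent: *
Disallow: /admin/
Allow: /public/

User-agent: Googlebot
User-agent: Bingbot   # both agents share the next rule
Disallow: /drafts/
"""
print(parse_groups(sample))
```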

This robots exclusion standard uses:

- User-agent lines: Target specific bots (or all bots with *)

- Allow and Disallow rules: Control which paths crawlers may request

- Wildcard patterns: Apply rules to multiple URLs (* matches any sequence of characters, and $ marks the end of a URL)

- Crawler-specific groups: Give different bots different rules
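Google, like the RFC 9309 standard, resolves conflicting rules by picking the most specific (longest) matching pattern, with Allow winning a tie. The following Python sketch approximates that matching for * and $; the function names and sample rules are hypothetical, and it skips details such as percent-encoding normalization. Python’s own urllib.robotparser does not implement these wildcards and simply applies the first rule that matches, which is why the logic is written out here.

```python
from __future__ import annotations

import re


def pattern_to_regex(pattern: str):
    """Translate a robots.txt path pattern into a compiled regular expression.

    '*' matches any run of characters; a trailing '$' anchors the end of the path.
    """
    anchored = pattern.endswith("$")
    body = pattern[:-1] if anchored else pattern
    regex = ".*".join(re.escape(piece) for piece in body.split("*"))
    return re.compile(regex + ("$" if anchored else ""))


def is_allowed(rules: list[tuple[str, str]], path: str) -> bool:
    """Longest matching pattern wins; on a tie, Allow wins; no match means allowed."""
    best_length, allowed = -1, True
    for directive, pattern in rules:
        if not pattern:
            continue  # an empty Disallow blocks nothing
        if pattern_to_regex(pattern).match(path):  # match() anchors at the start
            is_allow = directive == "allow"
            if len(pattern) > best_length or (len(pattern) == best_length and is_allow):
                best_length, allowed = len(pattern), is_allow
    return allowed


# Hypothetical rules for illustration.
RULES = [
    ("disallow", "/*?sort="),           # any URL with a ?sort= parameter
    ("disallow", "/*.pdf$"),            # URLs ending in .pdf
    ("disallow", "/private/"),
    ("allow", "/private/press-kit"),    # longer pattern overrides /private/
]

for path in ("/shoes?sort=price", "/guide.pdf", "/guide.pdf?v=2", "/private/press-kit.zip"):
    print(path, is_allowed(RULES, path))
```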

A professional SEO company in Indore ensures your crawler access control is configured correctly using robots.txt commands that match your business goals.

How to Optimize Robots.txt for Maximum Crawl Budget

Crawl budget is critical for large websites. Using robots.txt to block low-value pages keeps crawlers from wasting requests on them, so more of your crawl budget goes to the pages you want discovered and refreshed.

Pages to block for crawl budget preservation:

- Admin panel pages (/wp-admin/, /admin/)

- Login and registration pages

- Internal search result pages

- Duplicate content URLs

- Filter and sort parameter URLs

- Thank you and confirmation pages

- Cart and checkout process pages
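As an illustration, the page types listed above could be turned into a robots.txt file with a short Python script. Every path here is a hypothetical example (including the sort and filter parameter names), and duplicate-content URLs are left out because they depend entirely on your own URL structure. The Allow line keeps WordPress’s admin-ajax.php endpoint reachable, as WordPress’s default robots.txt does.

```python
# Hypothetical paths for the page types listed above; adjust them to your own URLs.
LOW_VALUE_PATHS = [
    "/wp-admin/",    # admin panel
    "/login/",       # login page
    "/register/",    # registration page
    "/search/",      # internal search results
    "/*?sort=",      # sort parameter URLs (hypothetical parameter name)
    "/*?filter=",    # filter parameter URLs (hypothetical parameter name)
    "/thank-you/",   # confirmation pages
    "/cart/",
    "/checkout/",
]


def build_robots_txt(disallow, allow=(), sitemap=None):
    """Return robots.txt text with a single group that applies to all crawlers."""
    lines = ["User-agent: *"]
    lines += [f"Allow: {path}" for path in allow]        # exceptions inside blocked folders
    lines += [f"Disallow: {path}" for path in disallow]
    if sitemap:
        lines += ["", f"Sitemap: {sitemap}"]           # Sitemap lines are not tied to a group
    return "\n".join(lines) + "\n"


print(build_robots_txt(
    LOW_VALUE_PATHS,
    allow=["/wp-admin/admin-ajax.php"],
    sitemap="https://www.example.com/sitemap.xml",
))
```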

At Namastetu Technologies, our digital marketing company in Indore applies crawl budget best practices that reduce wasted crawls, so search engines spend their resources on the content that matters most.

How to Implement Robots.txt on Multiple Domains

A robots.txt file applies only to the host, protocol, and port it is served from, so each domain needs its own file. Subdomains are treated as separate sites: blog.example.com does not follow the rules in www.example.com/robots.txt. If you manage several properties, create rules for each one based on its purpose.

Implementation strategy:

- Create a separate robots.txt file for each domain

- Configure each subdomain’s robots.txt independently

- Tailor directives to each site’s purpose

- Block crawlers on staging and test domains

- Keep production rules separate from staging rules
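Because rules are scoped per host, it helps to see which robots.txt file governs a given URL, and how a server might serve different files per host. This Python sketch is hypothetical throughout (the hostnames and both helper functions are illustrative). Keep in mind that a Disallow: / on staging stops crawling but does not guarantee that staging URLs stay out of the index; password protection is the more reliable way to keep a test site private.

```python
from urllib.parse import urlsplit, urlunsplit

# Hypothetical hostnames used only for illustration.
STAGING_HOSTS = {"staging.example.com"}


def robots_txt_url(page_url: str) -> str:
    """Return the robots.txt URL whose rules govern page_url.

    Rules are scoped to scheme + host + port, so every subdomain
    (and http vs. https) has its own robots.txt location.
    """
    parts = urlsplit(page_url)
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


def robots_body_for_host(host: str) -> str:
    """Serve a block-everything file on staging and normal rules elsewhere."""
    if host in STAGING_HOSTS:
        return "User-agent: *\nDisallow: /\n"
    return "User-agent: *\nDisallow: /admin/\n"


for url in (
    "https://www.example.com/products/shoes",
    "https://blog.example.com/posts/robots-txt",
    "http://www.example.com:8080/",
):
    print(url, "->", robots_txt_url(url))
```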

Whether you need a website development agency in Indore or an app development agency in Indore, configuring robots.txt correctly for every domain, subdomain, and international site is essential across your web properties.

How a Digital Marketing Agency in Indore Helps with Robots.txt Optimization

Working with a professional digital marketing agency in Indore like Namastetu Technologies ensures your robots.txt file is optimized for maximum SEO performance. Our comprehensive SEO services in Indore include:

- Technical SEO audit to identify robots.txt issues

- Crawl budget optimization for better search engine efficiency

- Social media integration with proper crawler directives

- Website development with built-in robots.txt best practices

- App development with web component indexing control

As a leading SEO agency in Indore, we understand that proper robots.txt configuration is just one piece of the puzzle. Our holistic approach combines technical SEO, content optimization, and strategic planning to improve your search visibility and organic traffic.

FAQ Section

What is a robots.txt file?

A robots.txt file is a plain text file in your site’s root directory that gives instructions to crawlers using the Robots Exclusion Protocol (standardized as RFC 9309). It controls which parts of your site bots may crawl.

What happens if my website doesn’t have a robots.txt file?

If your site has no robots.txt file (the request returns a 404 error), crawlers assume there are no restrictions and may crawl every page they can find.

Is robots.txt required for small websites?

A robots.txt file isn’t mandatory for a small website, but a simple one still helps with basic crawler management, such as keeping bots out of admin and login pages.

How do I know if my robots.txt file is working?

In Google Search Console, the robots.txt report shows which robots.txt files Google found for your site, when they were last crawled, and any warnings or errors. To check whether a specific page is blocked, use the URL Inspection tool.
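Alongside Search Console, a quick local check can catch rules that accidentally block important pages before you deploy a change. This is a minimal sketch using Python’s urllib.robotparser; the draft file, URL list, and blocked_urls helper are hypothetical. The module matches paths as plain prefixes and applies the first matching rule, so for rules that use wildcards Google’s interpretation can differ.

```python
from __future__ import annotations

from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt: str, urls: list[str], user_agent: str = "Googlebot") -> list[str]:
    """Return the URLs that this robots.txt would stop user_agent from crawling."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Hypothetical draft: "Disallow: /p" was meant for short /p... URLs,
# but as a prefix it also matches /products/ and /pricing.
DRAFT = """\
User-agent: *
Disallow: /admin/
Disallow: /p
"""

IMPORTANT_PAGES = [
    "https://www.example.com/",
    "https://www.example.com/products/shoes",
    "https://www.example.com/pricing",
    "https://www.example.com/blog/robots-txt-guide",
]

for url in blocked_urls(DRAFT, IMPORTANT_PAGES):
    print("Blocked:", url)
```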

Can search engines ignore robots.txt instructions?

Yes. Following robots.txt is voluntary. Major search engines such as Google and Bing respect it, but scrapers and malicious bots can ignore it, so don’t rely on robots.txt to protect sensitive content.

Does robots.txt affect my website’s organic traffic?

Yes, if it’s configured incorrectly. Blocking important pages stops search engines from crawling them, which can cost you rankings and organic traffic. A well-configured file has the opposite effect: it keeps crawlers focused on the pages that bring in visitors.

Which pages should I block to save crawl budget?

Good candidates include admin pages, login pages, internal search results, duplicate pages, and filter or sort parameter URLs. Blocking them keeps crawlers focused on the pages you want indexed.

Conclusion

Understanding robots.txt is essential for anyone serious about SEO and website optimization. This powerful file controls how search engines interact with your site, affecting everything from crawl budget to search visibility. Whether you’re managing a small blog or a large e-commerce platform, implementing robots.txt correctly helps search engines focus on your most valuable content.

If you’re looking for expert SEO services in Indore or need help with technical SEO implementation, Namastetu Technologies is your trusted digital marketing agency. Our team of SEO specialists ensures your robots.txt file and overall technical SEO foundation are optimized for success.

Ready to optimize your website’s robots.txt file? Contact Namastetu Technologies today for professional SEO services in Indore and take your search visibility to the next level!